post #4: german green hydrogen biz eyes proto-nazi death camp island for new factory site

may 22nd, 2024

new human remains are still being discovered on namibia’s shark island, but german green energy goals have to be met

A Namibian-registered German company is trying to build a green ammonia/“clean energy” facility in Namibia on an island that also happens to be the site of a concentration camp from the 1900s where some 3,000 Namibians were murdered by colonizers in a systematic genocide. German colonizers, in a German genocide, for which the descendants of genocide victims still haven’t received reparations.

Shark Island is a windy protrusion off Namibia’s southern Atlantic coast that carries somber meaning for the Ovaherero and Namaqua peoples. After both groups separately rebelled against the vicious German colonial apparatus in the summer of 1904, the nearly uninhabitable island was reinvented as a proto-Nazi death camp. Some of the island's 3,000 victims were reputedly thrown into the sea to be eaten by sharks after death. Like the "Final Solution" 35 years later, the last phase of the Namibian genocide was its most deadly. Shark Island is the best-preserved of the six death camps where the rebels, along with the rest of their communities, were abused, starved, frozen, and worked to death building a German railway line to the mainland--all part of a genocide that eventually took as many as 100,000 Namibian lives.

The historical significance of Shark Island goes beyond modern Namibian borders, however. The Germans used these death camps to hone the strategies of systematized violence they would later wield on Jews, Roma, Sinti, communists, queer people, and other "undesirables" under the Nazi regime. The racist violence also reinforced the ideology that would become a tenet of the Nazi worldview. One soon-to-be Nazi medical scientist, Eugen Fischer, began his career in medical experimentation on dead and dying Herero and Namaqua prisoners on Shark Island. Thus Shark Island actually holds the memory of two genocides: the resting place of the Ovaherero and Namaqua victims of German genocide also served as an incubator for the extreme racism that would be used to justify the Holocaust.

A new analysis by the nonprofit Forensic Architecture of the sites of the worst brutality suggests the physical traces of colonial violence still permeate those spaces, despite systemic underfunding of their preservation. The investigation indicates that Shark Island still hosts the unmarked graves and unburied remains of the victims of the death camp. Many Namibians and human rights advocates have called for a planned expansion of the port on Shark Island (now properly Shark Peninsula) to be halted in light of these grisly discoveries. Human remains have also been found in the poisoned wells of the Omaheke Sandveld. Investigators also note that the death toll of the genocide could be much higher than the prevailing estimates.

"While Germany has in recent years accepted moral responsibility for the genocide, they disclaim legal responsibility, avoiding the obligation to pay reparations and facilitate restitution," explain the Forensic Architecture authors. "Across Namibia, monuments honour the perpetrators of genocide, mass graves of victims are unmarked, and sites of atrocity fall into ruin. The majority of the country’s viable land is owned by white descendants of European colonists while Black descendants of genocide victims live in intergenerational poverty."

(Watch the full video from Forensic Architecture on the physical remnants of German colonialism in Namibia here.)

That hasn't stopped the Namibian government from seizing all opportunities to develop the island. Hyphen Energy, a German company with a new Namibian partnership, fulfills both the German national goals of clean energy investment and the Namibian goals of clean energy development. According to the Hyphen website, “At full scale development, the project will produce 2 million tonnes of green ammonia annually before the end of the decade for regional and global markets, from ~7GW of renewable generation capacity and ~3GW of electrolyser capacity, cutting 5-6 million tonnes (annually) of CO2 emissions, with Namibia’s annual 2021 emissions totalling 4.01 million tonnes.” The website also has the audacity to pitch Namibia as an ideal site for a green hydrogen project because of its low population. It was, of course, colonial starvation and brutality that made Namibia the second most sparsely populated country in the world. It was colonial armies who made huge swaths of ancestral lands unusable for generations by working the fragile soils to the point of aridity and literally poisoning village wells.

The lasting socioeconomic effects of the genocide are part of why Namibian chiefs previously rejected Germany’s offer of a 1bn euro "apology" in 2021. Not only was the number insultingly low, a testament to Germany's refusal to accept legal (and therefore economic) responsibility for its campaign, but it reflected the German authorities' lack of interest in reevaluating the human cost of the genocide in accordance with modern data. Reparations are still under discussion and the second phase of Forensic Architecture's investigation is now underway, but it remains to be seen whether this glimpse into the true scale of a historical horror will force a reckoning between descendants of perpetrators and of victims and survivors.

post #3: tumblr ceo has meltdown, reveals history of systemic transmisogyny, shits pants

february 27th, 2024

matt mullenweg's fragile ego ushers in a new wave of harassment for trans women users

trans women and trans femmes have called out tumblr's evident transmisogyny for over a decade. openly trans users, especially Black women and sex workers, are routinely booted from the platform for innocuous posts under the guise of "community guidelines". a few days ago, trans user @predstrogen made a joke the new CEO didn't like. in the meltdown that followed, matt mullenweg not only axed OP's account for what was clearly a facetious statement, but went on to violate data privacy law by stalking tumblr users who had reblogged the joke approvingly (all trans women) onto other platforms. you can read @predstrogen's full goodbye letter here.

maia arson crimew (on tumblr @nyancrimew) pointed out an immediate uptick in the normally predictable flow of transmisogynistic hate mail it receives, including messages that specifically referenced mullenweg's failprop campaign following tumblr's expulsion of @predstrogen.

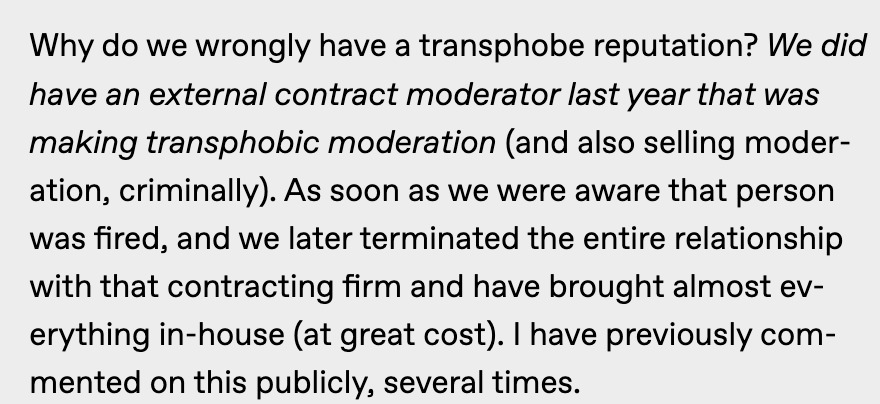

bizarrely, in one of many (as yet undeleted, but we'll see) posts on the topic on his tumblr, mullenweg appeared to confirm long-held suspicions that tumblr's moderation had been transphobic by design during a period that ended years before mullenweg's tenure. during that time, transphobic moderators used tumblr as a site for their personal vendettas against trans femmes. by reporting fully clothed selfies of trans femmes as 'sexually explicit', those moderators expelled countless members of the tumblr community during their reign. they also contributed to a widespread culture of harassment towards trans women on tumblr.

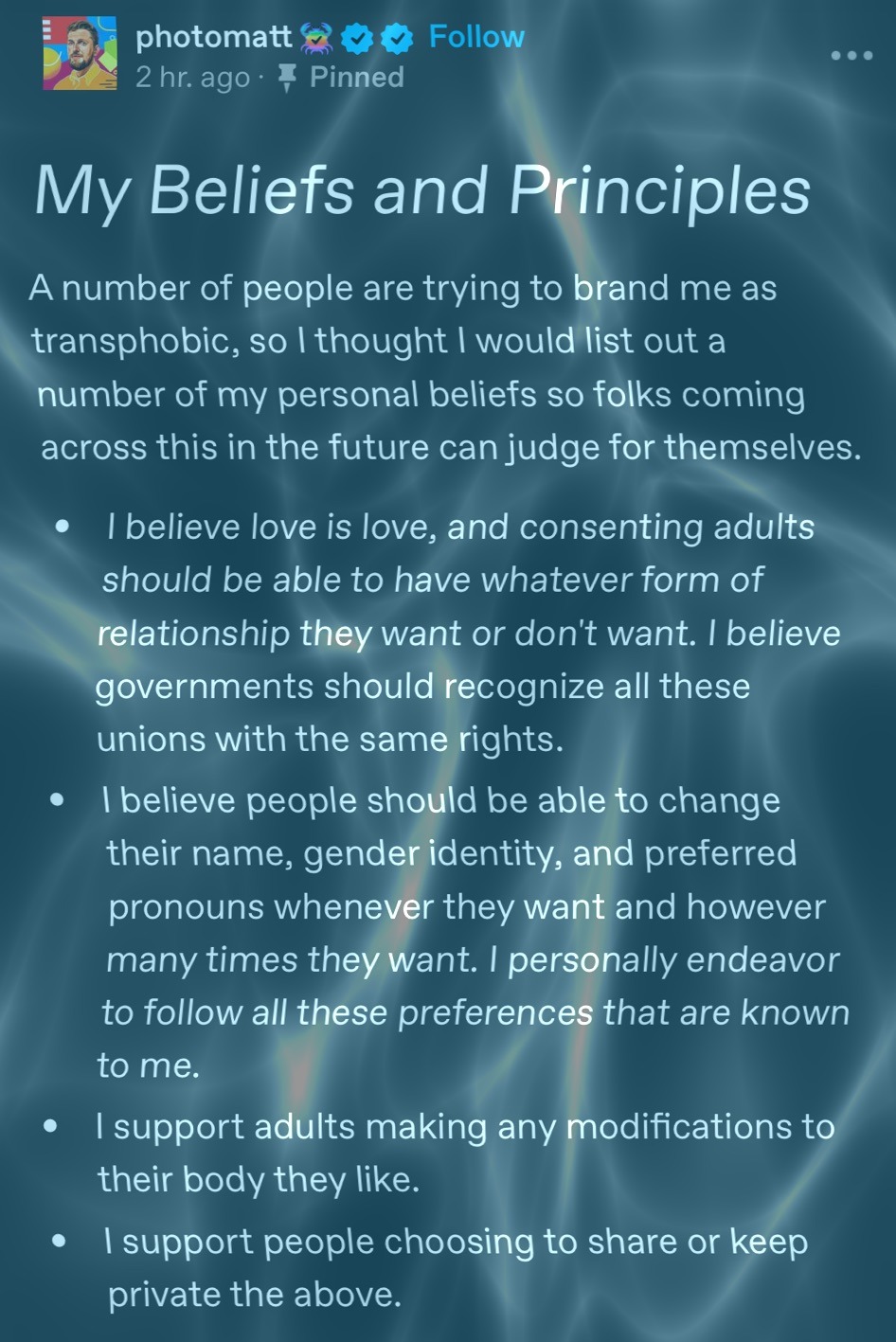

in response to users' demands for an explanation, mullenweg updated his profile to include a pinned post that outlines his personal values. because what trans sex workers who lose access to their primary means of income really want is to know that the cis man who could have reversed it believes in Freedom of Body Modification. what really matters to him is not the impact his company's policies have on the lived experiences of trans users, but that everyone on the internet knows that he, matt, is personally a really nice guy.

as trans tumblr user @kalamity-jane wrote, "Matt's 'principles and beliefs' are nothing more than a meaningless genuflection because it's motivated not by the selfless desire to make us feel safer here, it's motivated by his need for us to think he's actually a 'good boy' and this all just a misunderstanding."

posts are disappearing as mullenweg moves into Phase: Failspin and tries to cover his ass, but unfortunately for him, tumblr's receipts game, like its hate mail game, is famously unmatched.

UPDATE: on sunday @staff posted a statement that appears to directly contradict much of what mullenweg has said in the days since @predstrogen's removal. calling themselves "a few of the trans staff at tumblr and automattic", the authors lamented an alleged misrepresentation in @predstrogen's original removal notice that made the case appear more transmisogynist than it really was. they then say of the ceo's response: "Matt thereafter failed to recognize the harm to the community as a result of this suspension. Matt does not speak on behalf of the LGBTQ+ people who help run Tumblr or Automattic, and we were not consulted in the construction of a response to these events." the post also promises renewed prioritization for anti-harassment tools and internal reporting systems.